Augmented Vision will be available in 2015.

Am I prophetic? Delusional? Or merely guessing? How does a deluded, prophetic, hand-waving guess sound?

The reality is that developing complex systems like Augmented Vision will take more than putting the various technologies together. AV will require a change in the zeitgeist similar to the one the iPhone brought about. But that’s not what I’m here to talk about.

While the magic moment, the tipping point, will take some unknown trigger, the technologies will have to be available to support it. The iPhone couldn’t have existed five years ago, just like AV can’t exist right now.

The first thing to ask is: what is Augmented Vision? I will attempt to define the term, though others may disagree with my definition. That is okay, as I am only trying to place a target in space to aim an arrow at (or in this case, many arrows).

Definition of Augmented Vision: an unobtrusive, self-contained, human-based system that creates an augmented reality experience allowing the user to interact with any object in the populated world. Let’s break that down into its pieces.

1 – Unobtrusive self-contained: the ability for the devices to be fashionable, easy-to-wear and comfortable.

2 – Human-based: centered around the everyday human experience.

3 – Creates an AR experience: the cloud is a mature system overflowing with content.

4 – Interact with any object in the populated world: in our modern surroundings, anything can be identified, located and learned from.

I’m not speaking of an AV experience that makes the real and the virtual difficult to tell apart, like Denno Coil. I’m thinking of AV as a tool to enhance the everyday living experience, just as other technologies like the iPhone have.

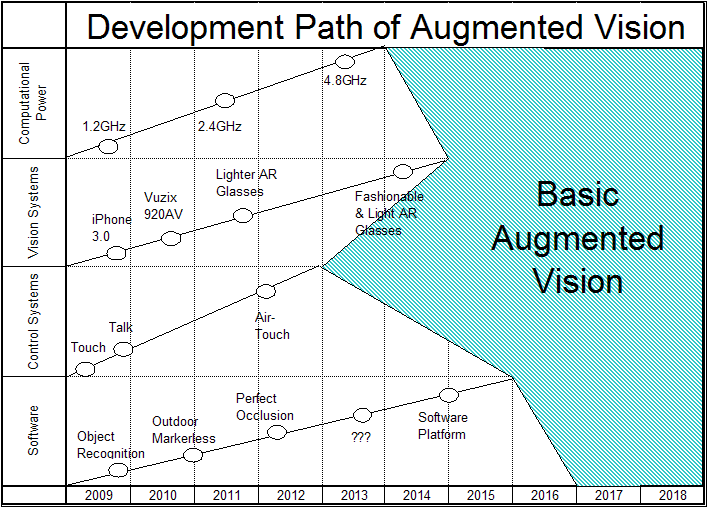

Computational Power – I’m using the difference in specifications between the iPhone 1.0 and 3.0 and considering Moore’s Law to generate a linear projection. I think 4.8GHz would be plenty of processing power to perform most operations, and Rouli pointed out to me that hard-core algorithms will be computed in the cloud, as with SREngine and Alcatel-Lucent’s initiative. So computational power won’t be the limiting factor.
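As a rough illustration of how a figure like 4.8GHz can fall out of this kind of projection, here is a minimal Python sketch. It is not necessarily the calculation behind the chart: the 600MHz starting clock for 2009 and the two-year doubling period are assumptions, the function name is made up for the example, and Moore’s Law strictly describes transistor counts rather than clock speed.

```python
def projected_clock_mhz(start_mhz, start_year, target_year, doubling_years=2.0):
    """Project a clock speed forward, assuming it doubles every `doubling_years`.

    All inputs are illustrative assumptions, not figures taken from the post.
    """
    doublings = (target_year - start_year) / doubling_years
    return start_mhz * 2 ** doublings


if __name__ == "__main__":
    # Assumed starting point: a 600 MHz handset processor in 2009.
    for year in range(2009, 2016, 2):
        print(year, round(projected_clock_mhz(600, 2009, year)), "MHz")
```

Under those assumptions, three doublings between 2009 and 2015 multiply the starting clock by eight, which lands on 4800MHz.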

Vision Systems – Not much to go on here except the release of the AV920 from Vuzix in the fall. Looking at the cellphone development cycle from the last ten years, I’m thinking lightweight, fashionable and comfortable AV glasses will be available to the masses in five years.

Control systems – Talk, type, touch and think. Typing and touch are the current system. Think is too far off to be realistic for a human-based system. This leaves talking and “air-touch” as the probable control systems. Much of the technology is already known, so control systems won’t limit AV.

Software – This is the biggest unknown. What makes up the bag of tricks required to make an AV platform similar to an iPhone? Object recognition, outdoor markerless tracking, perfect occlusion, non-rigid surfaces, optimizing frames-per-second, geolayers, etc. I placed some items on the progression, but it’s hard to say which ones will be needed and how they fit together. The tools eventually needed will depend on the creativity of the manufacturer. I could speculate further, but this is a question better answered by someone else.

While this post is mostly speculation based on the available research information and the limited commercial products on the market, I think it is a useful exercise to see the direction the technology is headed. Looking at the components required and seeing the gaps in development, an entrepreneur might use the opportunity to fill in the gap with the right product.

Once created, the basic Augmented Vision will be more like Terminator Vision, but it will create a platform to launch from. Will it grow until it reaches Augmented Vision that blurs reality and the virtual like Denno Coil or the Digital Sea? I might not find out in my lifetime, but until then, I’d be happy with an AV that gives me good hands-free directions to the nearest pub for a pint of Guinness.

Wow, great post, this kinda sums up my thinking on this subject. If I may, however, I have a couple of things to add.

Firstly on the control systems front, I was thinking about that problem this morning (on the bus) and I think you are missing two contenders:

– brain-machine interfaces (2014-2015?) although crude at the moment, this is an active area of research. This is my favourite candidate, with unlimited potential if some of the tough nuts can be cracked.

– eye movement / expression recognition – the tech for this is already around, not sure how usable/suitable it would be; probably not a primary interface

Also, with smart clothing, as in Rainbow’s End, certain gestures could form part of an interface, although probably not a primary interface.

As for the software, I still maintain that it exists right now. The only problem is that it hasn’t been integrated. There are great algorithms out there for registration, 3D scene reconstruction, occlusion, location sensing, etc. What needs to happen is for someone with the passion, time and money to come along and put them all together. I would do it myself, only I don’t have the time or the money!! 🙂

Anyway, great post, thanks.

I left the brain-machine interface off because, while possible right now, it is probably not going to be in an easy-to-use, portable state anytime soon, but I do think it’s an eventuality.

The smart clothing could be a part of the “air-touch”. Really it depends on how you want to configure the interface using your hands with a visual interface.

I also agree with your assessment of the software, mostly. Keep in mind a lot of those applications are very individual and haven’t been put together in a small handheld device platform.

Your last line says it best – “…someone with the passion, time and money to put them all together…”

[…] posts this week (he is a fierce contender for the top AR blogger spot), but his best was surely this one, where he interpolates current trends to come to the conclusion that Augmented Vision will be […]

I think your dates are about right, although I’d probably move a few things back/forward a little.

My own thinking control-wise is the humble stylus being first.

Brain-wave based stuff and even eye-tracking require bigger leaps in portability and interpretation.

A 3D stylus could be made now, working much like a Wiimote in reverse (just have two IR dots on either end of a stick), and the camera used for AR could also track its motions. Alternatively, you could use short-range radio triangulation to position it, but that will likely be more expensive.
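To make the two-dot idea a little more concrete, here is a minimal Python sketch of what a camera could recover from the two IR dots in a single frame. It assumes a simple pinhole camera and a stylus held roughly parallel to the image plane; with only two dots, tilt towards the camera cannot be told apart from extra distance. The function name, the dot spacing and the focal length are all hypothetical values chosen for illustration.

```python
import math


def stylus_from_dots(p1, p2, dot_spacing_m, focal_px):
    """Estimate stylus position cues from two IR dots seen by one camera.

    p1, p2        -- (x, y) pixel coordinates of the two dots
    dot_spacing_m -- physical distance between the dots on the stick (assumed known)
    focal_px      -- camera focal length in pixels (assumed known from calibration)

    Returns (midpoint_px, roll_deg, distance_m). The distance is only valid
    under the assumption that the stick is parallel to the image plane.
    """
    dx = p2[0] - p1[0]
    dy = p2[1] - p1[1]
    pixel_sep = math.hypot(dx, dy)
    if pixel_sep == 0:
        raise ValueError("dots coincide in the image; cannot estimate pose")

    midpoint_px = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2)
    roll_deg = math.degrees(math.atan2(dy, dx))      # rotation within the image plane
    distance_m = focal_px * dot_spacing_m / pixel_sep  # pinhole similar triangles
    return midpoint_px, roll_deg, distance_m


if __name__ == "__main__":
    # Hypothetical numbers: 15 cm stick, 800 px focal length.
    print(stylus_from_dots((100, 200), (300, 200), 0.15, 800))
```

A real tracker would need more than this (dot detection, a way to tell the two ends apart, and some handling of tilt), so treat it as a sketch of the geometry only.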

I think for the moment a physical controller like this is cheaper and more achievable than an air-touch thing, although I see air-touch as the long-term preference.

More specifically, the long term will probably be a glove-like system that can emulate almost all other controllers.

Think something like:

http://www.vrealities.com/cyber.html

As for other progress, imho, the key is to get a working Arn…an augmented reality network.

We gotta stop using individual apps and switch to having AR protocols and browsers. It will never be mass adopted if users keep having to download special software for each augmentation they want to see.

My own proposal is having it like IRC, with visual layers of information corresponding to channels. This way people can log in/out of any layers they wish, having multiple streams of data up at once.

Moreover, an IRC-like system lets people start their own channels on the fly, public or private, read-only or writable by others.
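A minimal Python sketch of that channel-per-layer idea might look like the following. It is an in-memory toy rather than a network protocol, and every name in it (`Layer`, `LayerServer`, the `invited` set, the example layer names) is hypothetical and only there to show the public/private and read-only/writable distinctions.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """One visual layer, playing the role of an IRC channel."""
    name: str
    owner: str
    public: bool = True      # anyone may join a public layer
    writable: bool = False   # if False, only the owner may post
    invited: set = field(default_factory=set)
    members: set = field(default_factory=set)
    items: list = field(default_factory=list)


class LayerServer:
    def __init__(self):
        self.layers = {}

    def create(self, name, owner, public=True, writable=False):
        """Start a new layer on the fly; the creator joins automatically."""
        if name in self.layers:
            raise ValueError(f"layer {name!r} already exists")
        layer = Layer(name, owner, public, writable)
        layer.members.add(owner)
        self.layers[name] = layer
        return layer

    def join(self, name, user):
        layer = self.layers[name]
        if not layer.public and user != layer.owner and user not in layer.invited:
            raise PermissionError(f"{user} is not invited to {name}")
        layer.members.add(user)

    def part(self, name, user):
        self.layers[name].members.discard(user)

    def post(self, name, user, item):
        layer = self.layers[name]
        if user not in layer.members:
            raise PermissionError(f"{user} has not joined {name}")
        if not layer.writable and user != layer.owner:
            raise PermissionError(f"{name} is read-only")
        layer.items.append((user, item))

    def visible_items(self, user):
        """Everything the user should see: items from all layers they have joined."""
        return [
            (layer.name, author, item)
            for layer in self.layers.values()
            if user in layer.members
            for author, item in layer.items
        ]
```

A client would then render `visible_items(user)` as overlays, so joining or leaving a layer is all it takes to switch a stream of augmentations on or off.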

The augmented reality network is going to have to come from the major developers. Ori expressed as much during his interview with UgoTrade last week, so there is no reason for me to go into it further. I think that’s going to be part of the point of ISMAR2009.

I do like the analogy to IRC, though, because the platform is solid but the user can make adjustments to his/her individual experience.

Something completely off-topic: The conversion enhancing “Tweet this/Follow Me since you found this post through twitter” Box contains a link to twitter but not to your profile 😉

Nice post!

I’ve been waiting for video headsets for about ten years now.

I have the AV920, but find it very disappointing.

What wows me more lately are applications on the Android phone like Wikitude and Google Sky Map.

So the first steps into ‘consumer’ AR will probably be a mobile phone camera with a screen on the other side that shows what the camera is seeing with extra information.

@ Anibal – That’s a bug with my widget. I need to find a new one that only uses the services I’m signed up on, not the one you found me with. I’ll have to fix that this week.

@ Onno – I assume you have the iWear AV920, which is a virtual reality system, and not the Wrap AV920, which is supposed to be used for AR because it’s see-through and has the “sunglasses” look. The only person I know who has used them is Ori Inbar, and they were only a demo version. I’m hoping the Wrap AV920 is the first step towards real AR goggles.

@ Anibal – Fixed the widget (WP Greetbox) with an upgrade. Shouldn’t give you the Twitter request now. Also moved it to the bottom of the posts. Always thought it was obnoxious up top. Thanks again for the feedback.

” Ori expressed during his interview with UgoTrade last week, so there is no reason for me to go into it further. I think that’s going to be part of the point of ISMAR2009.”

Fair enough!

Although if the “major developers” take too long, we might have to start doing it ourselves 😉

I think the Arn could actually be built on the IRC protocol itself, incidentally. Not ideal by a long shot, but IRC channels sending XML-formatted links to web-hosted meshes (or links to clients’ IPs and port numbers for temporary hosting) could be used for a shared visual space.
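As a rough sketch of what one of those XML-formatted links might look like on the wire, here is a small Python example using the standard library’s `xml.etree.ElementTree`. The `<mesh>` element, its attribute names and the example URL are all made up for illustration; nothing here is an agreed format. The one hard constraint worth keeping in mind is that a classic IRC message is limited to 512 bytes including the trailing CR-LF, so the payload has to stay short and on one line.

```python
import xml.etree.ElementTree as ET

# Hypothetical schema: a single <mesh> element whose attributes carry a link
# to a web-hosted mesh plus the place in the world where it should appear.
REQUIRED = ("href", "lat", "lon")


def build_mesh_message(url, lat, lon, alt=0.0):
    """Serialise a mesh link as one line of XML, suitable for a channel message."""
    elem = ET.Element("mesh", href=url, lat=repr(lat), lon=repr(lon), alt=repr(alt))
    return ET.tostring(elem, encoding="unicode")


def parse_mesh_message(text):
    """Parse a message produced by build_mesh_message into a plain dict."""
    root = ET.fromstring(text)
    if root.tag != "mesh":
        raise ValueError(f"unexpected element <{root.tag}>")
    missing = [name for name in REQUIRED if root.get(name) is None]
    if missing:
        raise ValueError(f"missing attributes: {missing}")
    return {
        "url": root.get("href"),
        "lat": float(root.get("lat")),
        "lon": float(root.get("lon")),
        "alt": float(root.get("alt", "0")),
    }


if __name__ == "__main__":
    line = build_mesh_message("http://example.com/meshes/sign.obj", 51.5, -0.12, 3.0)
    print(line)
    print(parse_mesh_message(line))
```

A client that has joined a layer’s channel would parse each incoming line this way, fetch the mesh over plain HTTP, and place it at the given coordinates.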

Not the best version of what the Arn could be, but after Ori talked about doing things “now” with existing protocols, that came to my mind quite quickly.

Well I think if someone could organize a group of talented individuals to build some open source code using some of the current structures of the web, then you could move in that direction right now.

If you have some tangible ideas and are willing to organize the masses, I’m sure we could put a call out to others. Are you willing to lead them? 🙂

Tom, you’re more than welcome, keep up the interesting posts, added you to my RSS-to-watch list 😉

Kind regards,

@AnibalDoRosario

[…] before I do that, I’d like to thank all those that read and commented on my Path to Augmented Vision post which surpassed my previous top post Automating the Digitalization of the World. Special […]

[…] The Future Digital Life :: The Path to Augmented Vision :: http … […]

[…] On my own site, my most popular posts have been: The Path To Augmented Vision, Creating the Cloud and How to Automate the Digitization of the World. The last one is from […]

[…] we’re years away from achieving augmented vision, the game Dead Space gives us a nice preview of some of the features that would be available. In […]

[…] however, is a long way from our current reality. We’re probably five years away from true augmented vision and then to begin to digitally construct the world, so we can bend reality, would take another […]

[…] past May, I predicted true Augmented Vision would occur around 2015. After the demonstrations on Monday, I’m not going to change my […]

[…] notable things that happened in May – My post about the Path to Augmented Vision, Programmer Joe talks AR at LOGIN, and 11 Industries to be Reinvented with Augmented […]