Augmented reality is only a medium (really just a mash-up of other media); content is king.

To make use of computer data and images superimposed on real life, we need content, but creating that content can be time-consuming and expensive. Instead, we have to find ways of leveraging the crowd to fill the cloud. As I’ve explained before, cataloging image databases will be one way we can build digital versions of the world.

Today, researchers at Google are presenting a paper at the Computer Vision and Pattern Recognition (CVPR) conference in Miami, Florida. Using 40 million GPS-tagged photos from Picasa and Panoramio, along with online tour webpages, they’ve been able to improve computer recognition of major landmarks. The technology sounds similar to Microsoft’s Photosynth, but the two may have different implications based on the form of the data.

The key point is that these technologies (either Google’s or Microsoft’s) will let us make use of the information, in the form of pictures, that is created daily and stored on the web. Not only is this data available, but because it is timestamped, it can also help us reconstruct significant past events. This will give us powerful tools for creating huge chunks of content for the cloud.

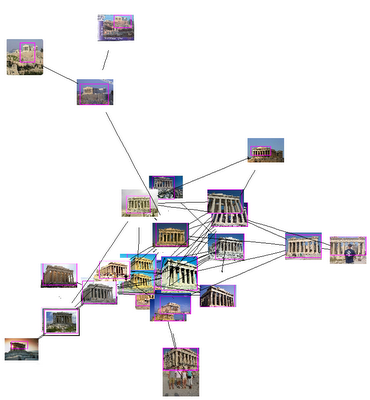

The picture above shows a visual representation of how their cluster recognition model works.
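To make the clustering idea a little more concrete, here is a minimal sketch in Python. It is not Google’s method (this post doesn’t cover the paper’s details); it only bins photo coordinates into a coarse latitude/longitude grid and keeps the cells with many photos as candidate landmarks. The function name `candidate_landmarks`, the cell size, and the photo threshold are all assumptions made up for illustration.

```python
from collections import defaultdict


def candidate_landmarks(photos, cell_deg=0.01, min_photos=3):
    """Group (lat, lon) photo locations into grid cells and return dense cells.

    cell_deg and min_photos are illustrative values, not taken from the paper.
    """
    cells = defaultdict(list)
    for lat, lon in photos:
        # Floor-divide so nearby photos land in the same grid cell.
        key = (int(lat // cell_deg), int(lon // cell_deg))
        cells[key].append((lat, lon))

    clusters = []
    for points in cells.values():
        if len(points) >= min_photos:
            # Use the mean position as the cluster centre.
            centre_lat = sum(p[0] for p in points) / len(points)
            centre_lon = sum(p[1] for p in points) / len(points)
            clusters.append((centre_lat, centre_lon, len(points)))

    # Most-photographed places first.
    return sorted(clusters, key=lambda c: c[2], reverse=True)


if __name__ == "__main__":
    # Three photos taken close together and one lone photo elsewhere.
    photos = [
        (48.8583, 2.2944),
        (48.8585, 2.2946),
        (48.8584, 2.2945),
        (40.6892, -74.0445),
    ]
    for lat, lon, count in candidate_landmarks(photos):
        print(f"{count} photos near ({lat:.4f}, {lon:.4f})")
```

Location alone can’t tell you what a photo actually shows, so a real system would need to go further, for example by comparing the image content within each cluster. Treat this as a toy for thinking about how noisy, crowd-made data can still point at the places people care about.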

Google has shown us another way to use large, noisy datasets to automate the digitization of the world.

Via Spatial Sustain.

Go Google! I know the conspiracy people are worried that they will take over the world, but I’m not sure that would be a bad thing. 🙂 Once again they are innovating.

Pretty good post. I just found your blog and wanted to say that I’ve really liked reading your posts. Anyway, I’ll be subscribing to your feed and I hope you write again soon!
